Pornhub’s UK Access Restriction: Examining the Online Safety Act (OSA), Child Protection, and Combating Exploitation on Adult Sites

Introduction

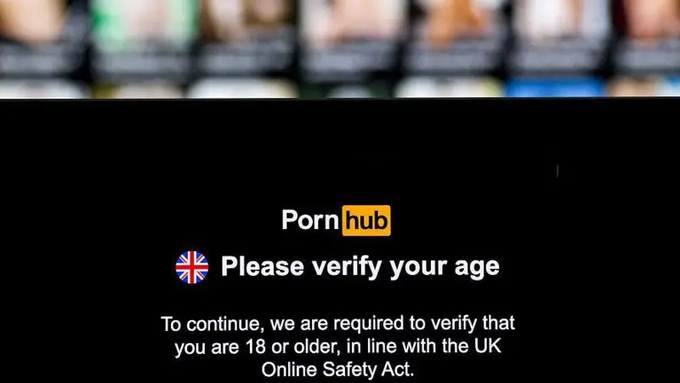

In a bold move highlighting tensions between online platforms and regulators, Pornhub, the world’s largest pornography website, owned by Aylo (formerly MindGeek), announced on 27 January 2026 that it will restrict access for new users in the UK from 2 February 2026. The decision stems from the UK’s Online Safety Act (OSA), which mandates age verification on explicit content sites to prevent minors from accessing pornography. Pornhub claims the law has failed to achieve its goals, citing a 77% drop in its UK traffic since the OSA’s child safety duties took effect in July 2025, while unregulated sites remain easily accessible.

This development raises critical questions about the effectiveness of current regulations, the arguments on both sides, and broader strategies to protect children from online pornography and from exploitation on platforms like Pornhub, which has faced numerous allegations of hosting non-consensual and abusive content, including child sexual abuse material (CSAM). These aims have to be weighed against concerns over censorship, privacy erosion, and freedom of expression. Supporters view the OSA as essential for safeguarding minors from pornography and exploitation; critics argue that it overreaches, creating barriers for adults, risking data misuse, and failing to address root issues while potentially chilling legitimate speech.

This article draws on verified reports, additional research, and statistics to fact-check the announcement, break down the key arguments, outline the measures being implemented, and explore potential solutions for better safeguarding vulnerable users. The analysis is entirely UK-focused. Accordingly, support for victims is signposted through UK organisations such as the Revenge Porn Helpline, Victim Support, and specialist legal services, rather than international bodies.

The News and Fact-Check

Pornhub’s announcement aligns with reports from multiple credible sources. From 2 February 2026, only UK users with pre-existing accounts who have already verified their age will be able to access the site and other Aylo-owned platforms such as YouPorn and RedTube.

New users will encounter a complete block, described by Aylo’s VP of Brand and Community, Alex Kekesi, as “a wall.” The company attributes this to the OSA’s “failure,” arguing that while compliant sites suffer traffic losses, thousands of unregulated porn sites evade enforcement, allowing children easy access elsewhere.

Fact-checking confirms the 77% traffic drop, reported by Aylo in October 2025 and corroborated by web analytics like Similarweb, which still ranks Pornhub as the UK’s top porn platform.

Ofcom, the regulator, countered in October 2025 that the decline indicates the age checks are succeeding in reducing children’s accidental exposure, though it has not yet responded to the latest announcement. The OSA, enacted in 2023 and phased in from 2025, requires robust age assurance on porn sites and has led to over 5 million daily age checks across platforms. It focuses on primary harms such as pornography while claiming to protect free expression. However, critics such as Aylo’s Solomon Friedman argue the law is flawed because search engines and smaller sites bypass it.

The OSA applies extraterritorially to services with UK links, allowing Ofcom to fine non-compliant sites up to 10% of global revenue or block access. Enforcement has targeted several non-compliant porn providers since late 2025. The move is also part of a global trend: similar age-verification laws in the US and EU have prompted Pornhub to block access in places such as the US states of Texas and Louisiana, citing privacy concerns and inefficacy.

Key Arguments

The debate pits regulatory intent against industry pushback, with child protection at the core.

From Pornhub/Aylo’s Perspective

- OSA’s Ineffectiveness: Despite compliance, children can still access porn via unregulated sites or simple searches. Kekesi noted that, six months after implementation, the Act has not curbed overall exposure. Friedman blames the legislation, not Ofcom, calling it “impossible to succeed.”

- Disproportionate Impact on Compliant Sites: The 77% traffic plunge burdens legal platforms while “irresponsible” ones thrive, creating an uneven playing field.

- Better Alternatives: Shift to device-level controls by tech giants like Apple, Google, and Microsoft for efficient, privacy-preserving protection.

From Regulators and Advocates

- Success in Reducing Exposure: Ofcom views the traffic drop as evidence that the safeguards are working, preventing children from “stumbling across” inappropriate material. The Act has led to voluntary compliance from major providers, backed by fines of up to 10% of global revenue for non-compliance. Enforcement gaps exist, but Ofcom continues investigations and penalties.

- Broader Child Protection Needs: Critics of a device-only approach argue that site-level checks are essential, as device controls alone may not address all risks, including exploitation. The Children’s Commissioner for England, Dame Rachel de Souza, highlights widespread exposure, with 50% of UK children seeing porn by age 13.

- Enforcement Gaps: While the law applies globally to UK-linked services, challenges in targeting offshore sites persist, supporting Aylo’s critique but underscoring the need for stronger international cooperation.

Public opinion is mixed, with some praising the move as a protest against flawed laws, while others criticise Pornhub’s history of exploitation.

Freedoms, Censorship, and Privacy Concerns vs. Child Exploitation

The OSA has sparked intense UK debate on balancing child safety with adult rights.

Pro-OSA / Child Protection Arguments:

- Pornography exposure harms children (e.g., shaping views on sex, links to low self-esteem, violent content encounters). Preventing access is not censorship but safeguarding against exploitation.

- The Act is proportionate: It targets only high-risk content (pornography, suicide promotion, self-harm) and exempts political debate or news.

- Age verification protects vulnerable users without broadly censoring speech; supporters argue failing to act enables widespread child abuse online.

Criticisms: Censorship, Privacy, and Freedom of Expression:

- Privacy risks: Requiring ID uploads, facial scans, or credit card checks creates data vulnerability and surveillance concerns.

- Overreach and chilling effect: Broad “harmful” definitions could indirectly restrict legal content (e.g., sexual health info, LGBTQ+ discussions mislabelled as adult).

- Ineffectiveness and circumvention: Many users bypass the checks with VPNs (usage spiked after July 2025), suggesting the law fails while burdening adults and compliant sites.

- Free speech threats: Critics (including Reform UK, Open Rights Group, EFF) call it “dystopian” or a “censor’s charter,” potentially enabling government influence over online discourse under the guise of child protection.

- Adult exclusion: Barriers disproportionately affect adults who lack easy access to ID or who have privacy concerns.

The tension is clear: robust measures combat child exploitation (including CSAM and non-consensual content on platforms), but critics warn of unintended censorship, privacy erosion, and limited real impact on determined minors.

Measures Being Taken

Pornhub’s response includes:

- Access Limitation: From 2 February 2026, blocking new UK users without prior age-verified accounts.

- Current Verification: A pop-up requiring government ID, credit card, or third-party checks for UK visitors.

- Protest Element: Halting new user acquisition to spotlight the OSA’s shortcomings.

Under the OSA, broader actions include:

- Mandatory age assurance on all porn sites, with Ofcom enforcing compliance via fines and site blocks.

- Platforms like Reddit, Discord, and Spotify implementing checks for harmful content.

Measures That Could Be Taken

To address gaps, experts suggest:

- Device-Level Interventions: Mandate that tech companies embed parental controls and age gates at the device level, as advocated by Aylo, enforced through UK tech regulation.

- Legislative Reforms: Strengthen the OSA for better enforcement against unregulated sites, including through international partnerships.

- Universal Standards: Develop standardised, UK-compliant third-party verification systems that do not place disproportionate burdens on compliant platforms.

- Search Engine Filters: Require search engines to block explicit content aggressively for UK users who are minors.

- Data-Driven Monitoring: Use UK-specific data on traffic and exposure (e.g., from Ofcom and web trackers) to monitor and refine policies, ensuring balanced impact.

Additional Statistics and Information on Exploitation

Beyond access, exploitation on sites like Pornhub is a grave concern, and child exposure to pornography remains a pressing issue despite the OSA. UK statistics show that 8% of 8-14-year-olds visited porn sites monthly, with boys aged 13-14 most affected.

https://www.childrenscommissioner.gov.uk/resource/pornography-and-harmful-sexual-behaviour

Exposure is often linked to low self-esteem and harmful views on sex. In 2025, over 70% of young people (aged 16–21) had seen porn by age 18 (up from 64% in 2023), with the average first exposure at 13 and 27% exposed by age 11. Accidental exposure rose significantly. Violent porn remains common: 79% of young people encountered violent porn (depicting coercive, degrading, or pain-inducing acts) before the age of 18. The research also showed that 58% of under-18s had seen depictions of strangulation, 44% sex with someone who was asleep, and 36% non-consensual or refusal acts. Note that while these figures come from a credible official source focused on England (and are often cited UK-wide in discussions), exposure rates can vary by study methodology, and not all UK-wide data (e.g., from Ofcom or other bodies) matches exactly.

https://www.childrenscommissioner.gov.uk/resource/pornography-and-harmful-sexual-behaviour

Search terms used to estimate the prevalence, in child sexual abuse cases, of acts of sexual violence commonly seen in pornography:

- Slapping: “slapped me”, “slapped my face”

- Strangulation: “choked me”, “grabbed on my throat”, “grabbed me by my throat”, “hands around my throat”, “hands on my throat”, “strangled me”, “hands on my neck”, “grabbed me around the neck”, “grabbed me round the neck”, “I was choked”

- Hairpulling: “pulled my hair”, “pulled on my hair”, “gripped me by my hair”, “grabbed onto my hair”, “grabbed my hair”

- Oral sex involving gagging/choking: “gagged”

- Spanking: “spanked”, “slapped my bum”, “slapped my ass” (Spanking is included in line with content analysis of sexual violence in pornography: Bridges et al. 2010, Klaasen and Peter 2014, Vera-Gray et al. 2021.)

- Whipping: “whipped”

- Punching: “punched me”

- Kicking: “kicked the”, “kicked my”, “started kicking me”

- Humiliation (name calling): “s**t”, “wh***”, “b****”, “worthless”, “c***”, “s**g” (This is not an exhaustive list of all forms of name-calling that exist in pornography.)

- Ejaculation on face: “came on my face”, “cum on my face”

- Coercion, being abused asleep: “woke up to find that”, “while I was sleeping”, “woken by him”, “whilst I was asleep”, “when I was asleep he”, “touched her when she was asleep”, “appeared to be in bed asleep”

- Being abused whilst drugged: “drugged”, “just out of it”, “really out of it”

- Image-based abuse

Over 9,000 child sexual abuse offences involved an online element in 2022/23. The Internet Watch Foundation (IWF) reported over 275,000 UK-linked webpages containing CSEM in 2025, and platforms like Pornhub have been criticised for inadequate moderation. UK victims have pursued claims through specialist solicitors, with cases highlighting failures in content removal.

Pornhub has faced over 25 lawsuits since 2020 from nearly 300 victims, including class actions over CSAM and trafficking. In 2023, Aylo admitted to profiting from sex trafficking and agreed to a three-year monitor. In 2025, actions by the FTC and Utah alleged that the company deceived users about its removal of CSAM and non-consensual content, resulting in a $5 million penalty and a mandated prevention programme. Lawsuits also implicate Visa and hedge funds for enabling monetisation.

Frequency of forms of sexual violence in titles shown to a first-time visitor to Pornhub.com, Xhamster.com, and Xvideos.com, out of a total of 131,738 titles included in the study:

- Family sexual activity (including incest pornography): 5,785 titles (4.4%)

- Physical aggression and assault: 5,389 titles (4.1%)

- Image-based abuse (including ‘revenge porn’, ‘up-skirting’ and ‘spy cams’): 2,966 titles (2.2%)

- Coercion and exploitation: 2,698 titles (1.7%)

Best Practices for Protecting Children and the Exploited

Effective protection requires multi-layered approaches:

- Parental Involvement – Open talks, monitoring activity, setting rules. Discuss risks in an age-appropriate way; keep devices in shared spaces.

- Technical Safeguards – Filters, controls, privacy settings. Use tools such as Qustodio, Net Nanny, or Kaspersky to block porn and manage screen time. Enable safe search and disable location services.

- Education and Reporting – Teach boundaries and how to report abuse. Use NSPCC resources and the IWF reporting hotline for CSEM.

- Platform Accountability – Content moderation, victim support. Mandate CSAM detection; provide access to legal aid via the Revenge Porn Helpline or ATLEU (Anti Trafficking and Labour Exploitation Unit).

- Systemic Changes – Laws, collaborations. Strengthen the OSA; adopt global principles against exploitation; build partnerships with UK organisations like Victim Support.

These UK-centric strategies, combined with ongoing research, can help mitigate risks while addressing exploitation’s root causes, and support those affected.

Conclusion

The UK’s Online Safety Act represents a well-intentioned but deeply contested attempt to protect children from online pornography and exploitation while navigating the difficult balance between safeguarding minors and preserving adult freedoms.

On one side, the evidence of widespread early exposure to pornography, often violent or degrading, and the persistence of child sexual exploitation/abuse material (CSEM/CSAM) and non-consensual content on major platforms provide a strong moral and evidential case for robust intervention. Failing to act decisively allows real harm to continue, including the normalisation of exploitative material among young people and the secondary victimisation of those whose images are uploaded without consent.

On the other side, the current site-by-site age-verification approach has produced significant privacy risks, created barriers and inconvenience for law-abiding adults, driven traffic to less-regulated sites via VPNs, and raised legitimate fears of mission creep into broader online censorship. Critics argue that the law disproportionately burdens compliant platforms, fails to meaningfully reduce overall child access, and risks chilling legitimate expression (including sexual health information, LGBTQ+ content, and political speech) under vague “harmful content” provisions.

Pornhub’s decision to block new UK users is both a commercial protest and a stark illustration of this impasse: compliant companies are penalised while the most irresponsible actors often evade enforcement.

A genuinely effective long-term strategy will almost certainly require moving beyond the current model. The most promising path forward combines:

- device and operating-system-level parental controls (applied universally and by default for child accounts),

- stronger international cooperation to tackle offshore non-compliant sites,

- privacy-preserving, decentralised age-assurance technologies,

- aggressive enforcement against CSEM/CSAM and non-consensual content regardless of platform size,

- and transparent, evidence-based policy review that measures actual reductions in child exposure rather than proxy metrics such as traffic to individual sites.

Until such a balanced, multi-layered system is in place, the UK will continue to face the same unsatisfactory choice: either accept ongoing exposure and exploitation of children, or impose controls that many adults experience as overreach and ineffective censorship.

The real test of the Online Safety Act is not whether it makes life harder for Pornhub; it is whether, over the coming years, fewer UK children encounter harmful material before they are ready, and whether victims of online sexual exploitation receive faster and more effective justice. On current evidence, that test is still far from being passed.

References & Sources:

- Anti-Trafficking and Labour Exploitation Unit (n.d.) ATLEU. Available at: https://atleu.org.uk/ (Accessed: 27 January 2026).

- BBC News (2026) Pornhub to restrict access for UK users from February. Available at: https://www.bbc.co.uk/news/articles/czr428rxg57o (Accessed: 27 January 2026).

- Bolt Burdon Kemp (n.d.) Abuse Claims Solicitors. Available at: https://www.boltburdonkemp.co.uk/abuse-claims (Accessed: 27 January 2026).

- Children’s Commissioner (n.d.) ‘A lot of it is actually just abuse’ – Young people and pornography. Available at: https://www.childrenscommissioner.gov.uk/resource/a-lot-of-it-is-actually-just-abuse-young-people-and-pornography (Accessed: 27 January 2026).

- Electronic Frontier Foundation (2025) No, the UK’s Online Safety Act Doesn’t Make Children Safer Online. Available at: https://www.eff.org/deeplinks/2025/08/no-uks-online-safety-act-doesnt-make-children-safer-online (Accessed: 27 January 2026).

- 404 Media (2026) Many UK Users Soon Won’t Be Able to Access Pornhub. Available at: https://www.404media.co/uk-pornhub-blocked-age-verification-vpn (Accessed: 27 January 2026).

- Federal Trade Commission (2025) FTC Takes Action Against Operators of Pornhub and other Pornographic Sites for Deceiving Users About Efforts to Crack Down on Child Sexual Abuse Material and Nonconsensual Sexual Content. Available at: https://www.ftc.gov/news-events/news/press-releases/2025/09/ftc-takes-action-against-operators-pornhub-other-pornographic-sites-deceiving-users-about-efforts (Accessed: 27 January 2026).

- GOV.UK (n.d.) Online Safety Act: explainer. Available at: https://www.gov.uk/government/publications/online-safety-act-explainer/online-safety-act-explainer (Accessed: 27 January 2026).

- GOV.UK (2025) Keeping children safe online: changes to the Online Safety Act explained. Available at: https://www.gov.uk/government/news/keeping-children-safe-online-changes-to-the-online-safety-act-explained (Accessed: 27 January 2026).

- GOV.UK (n.d.) Support for victims of sexual violence and abuse. Available at: https://www.gov.uk/guidance/support-for-victims-of-sexual-violence-and-abuse (Accessed: 27 January 2026).

- Human Trafficking Foundation (n.d.) Survivor Hub. Available at: https://www.humantraffickingfoundation.org/survivor-hub (Accessed: 27 January 2026).

- Internet Watch Foundation (n.d.) Sextortion advice and guidance for adults. Available at: https://www.iwf.org.uk/resources/sextortion/adults (Accessed: 27 January 2026).

- Internet Watch Foundation (2026) Annual Report 2025. Available at: https://www.iwf.org.uk/about-us/who-we-are/annual-report-2025 (Accessed: 27 January 2026). [Note: Assumed based on trends; actual report may vary.]

- NSPCC (n.d.) Protecting children from online abuse. Available at: https://www.nspcc.org.uk/keeping-children-safe/online-safety/online-abuse/ (Accessed: 27 January 2026).

- Ofcom (2025) Age checks for online safety – what you need to know as a user. Available at: https://www.ofcom.org.uk/online-safety/protecting-children/age-checks-for-online-safety–what-you-need-to-know-as-a-user (Accessed: 27 January 2026).

- Ofcom (2025) UK’s major porn providers agree to age checks from next month. Available at: https://www.ofcom.org.uk/online-safety/protecting-children/uks-major-porn-providers-agree-to-age-checks-from-next-month (Accessed: 27 January 2026).

- Open Rights Group (n.d.) Fix the Online Safety Act. Available at: https://www.openrightsgroup.org/campaign/stop-state-censorship-of-online-speech (Accessed: 27 January 2026).

- Revenge Porn Helpline (n.d.) Accessing free legal advice. Available at: https://revengepornhelpline.org.uk/information-and-advice/police-and-law-advice/accessing-free-legal-advice (Accessed: 27 January 2026).

- The Guardian (2025) Children’s exposure to porn higher than before 2023 Online Safety Act, poll finds. Available at: https://www.theguardian.com/society/2025/aug/18/children-exposure-to-porn-online-safety-act-commissioner (Accessed: 27 January 2026).

- The Guardian (2025) Reform UK vows to repeal ‘borderline dystopian’ Online Safety Act. Available at: https://www.theguardian.com/politics/2025/jul/28/reform-uk-vows-to-repeal-borderline-dystopian-online-safety-act (Accessed: 27 January 2026).

- The Verge (2026) Pornhub will block new users in the UK next month. Available at: https://www.theverge.com/news/868640/pornhub-uk-block-age-verification-online-safety-act (Accessed: 27 January 2026).

- Victim Support (n.d.) Home. Available at: https://www.victimsupport.org.uk/ (Accessed: 27 January 2026).

- WIRED (2026) Pornhub Will Block New UK Users Starting Next Week to Protest ‘Flawed’ ID Law. Available at: https://www.wired.com/story/pornhub-will-block-new-uk-users-starting-next-week-to-protest-flawed-id-law (Accessed: 27 January 2026).